25 Apr 2022

Update 2023-04-21: Everything here describes a hacky solution. The same has now been done properly by the pyodide_http project.

In the previous post I showed how I shimmed the Python module requests.

In the meantime I have made processing binary responses possible, using a slightly weird browser feature that probably still exists for backward compatibility reasons.

Since the requests API is a simple blocking Python call, we can’t use asynchronous fetch calls.

This means XMLHttpRequest is the only (built-in) option to perform our HTTP requests in JavaScript (from Python code).

So the two challenges are that the requests need to be done with XMLHttpRequest, and they should be synchronous calls.

Normally, if you want to do something with the raw bytes of an XMLHttpRequest, you would simply do:

request = new XMLHttpRequest();

request.responseType = "arraybuffer";

// or .responseType = "blob";

However, if this responseType is combined with the async parameter set to false in the open call, you get the following deprecation warnings and error:

request = new XMLHttpRequest();

request.responseType = 'arraybuffer';

request.open("GET", "https://httpbin.org/get", false);

request.send();

// Synchronous XMLHttpRequest on the main thread is deprecated because of its detrimental effects to the end

// user’s experience. For more help http://xhr.spec.whatwg.org/

// Use of XMLHttpRequest’s responseType attribute is no longer supported in the synchronous mode in window context.

// Uncaught DOMException: XMLHttpRequest.open: synchronous XMLHttpRequests do not support timeout and responseType

The Mozilla docs provide helpful tricks for handling binary responses, back from when the responseTypes arraybuffer and blob simply didn’t exist yet.

The trick is to override the MIME type, say that it is text, but that the character set is something user-defined: text/plain; charset=x-user-defined.

request.overrideMimeType("text/plain; charset=x-user-defined");

request.responseIsBinary = true; // as a custom flag for the code that needs to process this

The request.response we get is a string in which every original byte has become one “character”. Bytes from 0x80 upward land in Unicode’s Private Use Area (U+F780 to U+F7FF), so we keep only the low byte of each code point to get the original bytes back.

Note that the following code block contains Python code made for Pyodide. The request object is still an XMLHttpRequest, but it’s accessed from the Python code:

def __init__(self, request):
    if request.responseIsBinary:
        # bring everything outside the range of a single byte within this range
        self.raw = BytesIO(bytes(ord(byte) & 0xff for byte in request.response))
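The masking step can be tried outside the browser. The following is a minimal sketch, assuming the x-user-defined decoding rule from the WHATWG Encoding Standard (bytes below 0x80 map to themselves, the rest to U+F780 and up). The helper names to_x_user_defined and from_x_user_defined are hypothetical and only exist for this illustration.

```python
from io import BytesIO


def to_x_user_defined(data: bytes) -> str:
    """Mimic how a browser decodes bytes labelled charset=x-user-defined."""
    return ''.join(chr(b) if b < 0x80 else chr(0xF780 + b - 0x80) for b in data)


def from_x_user_defined(text: str) -> bytes:
    """Keep only the low byte of every code point, as the shim above does."""
    return bytes(ord(char) & 0xFF for char in text)


original = bytes(range(256))            # every possible byte value once
as_text = to_x_user_defined(original)   # what request.response would hold
restored = from_x_user_defined(as_text)
raw = BytesIO(restored)                 # file-like object, like self.raw above

print(restored == original)
```

Because the high bytes are shifted by a multiple of 256, the low byte of each code point is exactly the original byte, which is why a simple mask is enough.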

Even though this works right now, some concessions have been made to achieve the goal of performing HTTP requests from Pyodide.

The worst concession is running on the main thread, with the potential of freezing browser windows.

The future of this project is to write asynchronous Python code using aiohttp, and shim aiohttp to use the JavaScript fetch API.

To see all these things in action, check the current state of shimming requests on GitHub: bartbroere/requests#1

Update 2023-04-20: I’m no longer maintaining and hosting a custom Pyodide build to demonstrate it. The link to it has been removed.

05 Nov 2021

Update 2023-04-21: Everything here describes a hacky solution. The same has now been done properly by the pyodide_http project.

The Pyodide project compiles the CPython interpreter and a collection

of popular libraries to the browser. This is done using emscripten and results

in JavaScript and WebAssembly packages.

This means you get an almost complete Python distribution, that can run completely in the browser.

Pure Python packages are also pip-installable in Pyodide, but these packages might not be usable if they (indirectly)

depend on one of the standard library modules that Pyodide does not support.

As a result, many network-related things are not possible. Anything that

depends on importing socket or the http library will not work. Because of this, you can’t use the popular library

requests yet.

The project’s roadmap has

plans for adding this networking support,

but this might not be ready soon.

Therefore I created an alternative requests module specifically for Pyodide, which keeps the requests API but performs the

actual calls with JavaScript XMLHttpRequests. I’m currently developing it in a fork at

bartbroere/requests#1.

Helping hands are always welcome!

Since most browsers have strong opinions on what a request should look like in terms of included headers and cookies,

this new version of requests will not always do what the normal requests does. This can be a feature instead of a bug.

For example, if the browser already has an authenticated session to an API, you could automatically send authenticated requests

from your Python code.

This is the end result, combined with some slightly dirty hacks (in python3.9.js) to make the script MIME type text/x-python evaluate automatically:

<script src="https://pypi.bartbroe.re/python3.9.js"></script>

<script type="text/x-python">

from pprint import pprint

import requests

pprint(requests.get('https://httpbin.org/get', params={'key': 'value'}).json())

pprint(requests.post('https://httpbin.org/post', data={'key': 'value'}).json())

</script>

Hopefully, my requests module will not have a long life, because the Pyodide project has plans to make a more sustainable solution.

Until then, it might be a cool hack to support the up to 34K libraries that depend on requests

in the Pyodide interpreter.

19 Mar 2021

On Python 2, Django prefers the __unicode__ method of a class to get human-readable strings for its interfaces. On Python 3, however, it defaults to the __str__ method. Django-specific porting guides and utilities used to solve this by suggesting a __str__ method, with the python_2_unicode_compatible decorator on the class.

This was a nice enough solution for a long time, for code bases migrating from Python 2 to Python 3 or wanting to support both at the same time.

However, with the official end of life of Python 2 on January 1st 2020, adding this decorator started making less sense to me.

Now you should definitely support only Python 3 runtimes for Django projects.

As an additional porting utility, I created a fixer for 2to3 that renames all __unicode__ dunder methods to __str__, where possible.

The current status of the fixer util is that I have created a pull request on the 2to3 library (even though I’m not sure whether it will be accepted).

Update: lib2to3 is no longer maintained, so just get the fixer from the diff of the closed pull request if you want to use it.
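To give an idea of what such a rename involves, here is a minimal sketch using the standard library's ast module instead of lib2to3. It is not the fixer from the pull request: rename_unicode_to_str is a hypothetical helper, and interpreting “where possible” as “only when the class has no __str__ yet” is an assumption. It needs Python 3.9 or newer for ast.unparse, and unlike a real 2to3 fixer it does not preserve comments or formatting.

```python
import ast


def rename_unicode_to_str(source: str) -> str:
    """Rename __unicode__ to __str__ in classes that do not define __str__."""
    tree = ast.parse(source)
    for node in ast.walk(tree):
        if not isinstance(node, ast.ClassDef):
            continue
        # Only look at methods defined directly in the class body
        methods = {item.name: item for item in node.body
                   if isinstance(item, ast.FunctionDef)}
        if '__unicode__' in methods and '__str__' not in methods:
            methods['__unicode__'].name = '__str__'
    return ast.unparse(tree)


example = (
    "class Book:\n"
    "    def __unicode__(self):\n"
    "        return self.title\n"
)
converted = rename_unicode_to_str(example)
print(converted)
```

A class that already has both methods is left alone, since renaming would silently replace the existing __str__.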

21 Dec 2020

A year after Python 2 officially reached its end of life, 2to3 is still my favourite tool for porting Python 2 code to Python 3.

Only recently, when using it on a legacy code base, I found one of the edge cases 2to3 will not fix for you.

Consider this function in Python, left completely untouched by running 2to3.

It worked fine in Python 2, but raises a RecursionError in Python 3.

(It is of questionable quality; I didn’t make it originally).

from collections import OrderedDict


def safe_escape(value):
    if isinstance(value, dict):
        value = OrderedDict([
            (safe_escape(k), safe_escape(v)) for k, v in value.items()
        ])
    elif hasattr(value, '__iter__'):
        value = [safe_escape(v) for v in value]
    elif isinstance(value, str):
        value = value.replace('<', '%3C')
        value = value.replace('>', '%3E')
        value = value.replace('"', '%22')
        value = value.replace("'", '%27')
    return value

But why? It turns out strings in Python 2 don’t have the __iter__ method, but they do in Python 3.

What happens in Python 3 is that the hasattr(value, '__iter__') condition becomes true, when value is a string.

It now iterates over each character in every string in the list comprehension, and calls itself (the recursion part).

But… each of those strings (characters) also has the __iter__ attribute, quickly reaching the max recursion depth set by your Python interpreter.
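The mechanism can be reproduced in a few lines. This is a minimal sketch: naive_escape is a hypothetical, stripped-down stand-in for the iterable branch of safe_escape, not the original function.

```python
def naive_escape(value):
    # Same shape as the hasattr branch above, reduced to the essentials
    if hasattr(value, '__iter__'):
        return [naive_escape(v) for v in value]
    return value


print(hasattr('a', '__iter__'))  # True on Python 3
print(list('a'))                 # ['a']: a one-character string yields itself

try:
    naive_escape('a')
    outcome = 'finished'
except RecursionError:
    outcome = 'RecursionError'
print(outcome)
```

Iterating over a one-character string yields that same one-character string again, so the recursion never reaches a value without __iter__.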

In this function it was easy to fix of course:

- Either the order of the two elifs can be swapped,

- or we exclude strings from the iter-check (elif hasattr(value, '__iter__') and not isinstance(value, str)).
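As a sketch of the first option, the function could look like this with the string check moved before the generic iterable check. The loop over replacement pairs is my own shorthand for the four replace calls; the behaviour for strings is intended to be the same.

```python
from collections import OrderedDict

# The four characters the original function escapes
REPLACEMENTS = (('<', '%3C'), ('>', '%3E'), ('"', '%22'), ("'", '%27'))


def safe_escape(value):
    if isinstance(value, dict):
        value = OrderedDict(
            (safe_escape(k), safe_escape(v)) for k, v in value.items()
        )
    elif isinstance(value, str):
        # Strings are handled first, so they never reach the iterable branch
        for char, escaped in REPLACEMENTS:
            value = value.replace(char, escaped)
    elif hasattr(value, '__iter__'):
        value = [safe_escape(v) for v in value]
    return value


print(safe_escape({'<key>': ['"quoted"', 1]}))
```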

The more labour-intensive fix would be rewriting it entirely, since all it really does is recursive URL encoding (but for four characters only).

Maybe there’s a (bad) reason it only URL encodes these four characters, so that was a can of worms I didn’t want to open.

Anyway, the main lesson for me was: even though Python 2 is gone, you might still need to remember its quirks.

11 Sep 2020

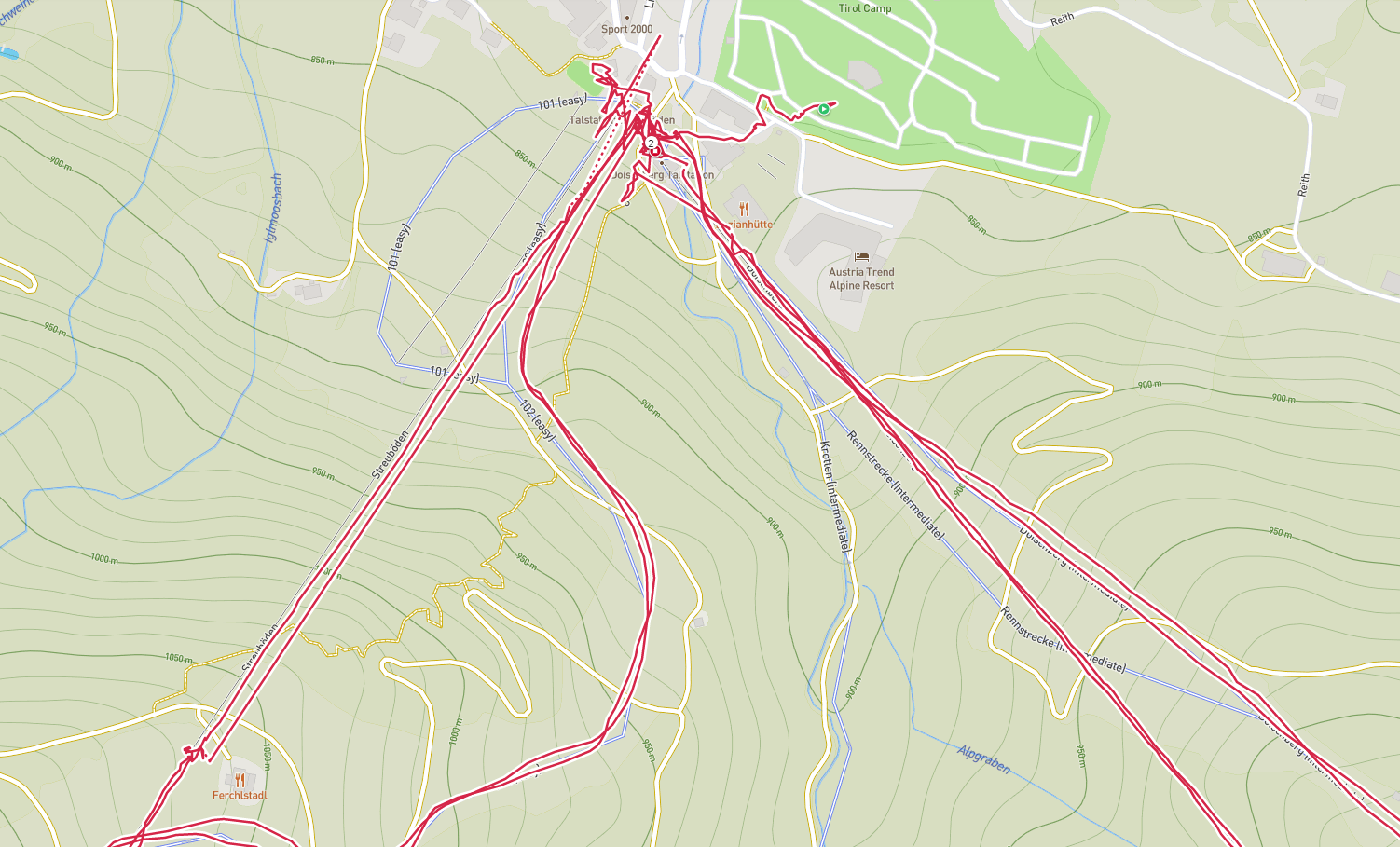

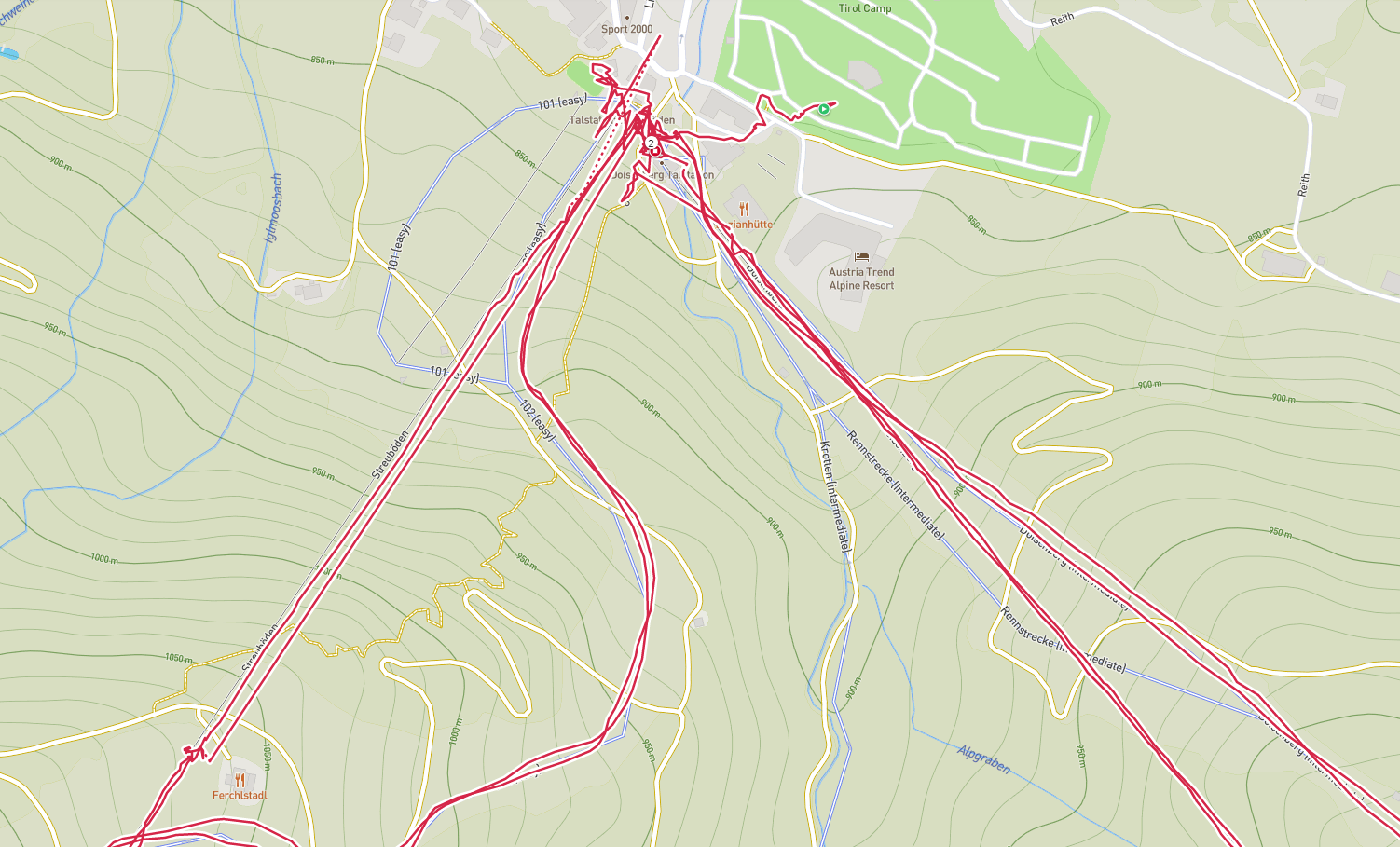

The goal of this notebook is to separate ski lifts from ski slopes, using a set of features and an

external dataset with the ski lifts of the world (OpenSnowMap).

This shouldn’t be too difficult a task, but maybe

just difficult enough to justify some feature engineering and training a classifier.

Separating ski lifts from slopes is useful, since the activity’s statistics (average speed, heart rate etc.)

can be improved by removing the ski lifts from the data.

My secondary goal is trying out datalore.io.

import json

import os

import struct

from base64 import b64encode

from datetime import timedelta

from pprint import pprint

import iso8601

import numpy

import pandas

import untangle

import geopandas

from cachier import cachier

from shapely.geometry import Point, MultiPoint

from sklearn.linear_model import LogisticRegressionCV, LogisticRegression

from sklearn.model_selection import cross_val_score

from sklearn.base import TransformerMixin, BaseEstimator

from sklearn.pipeline import Pipeline

from sklearn.preprocessing import PolynomialFeatures, StandardScaler

from IPython.core.display import HTML

from shapely.ops import nearest_points

from haversine import haversine, Unit

I’m using the output of a Polar Vantage V sports watch. The output is a GPX file with all the

GPS registrations, and a CSV file. This CSV file has the added boolean

column Lift, which is hand-labeled data (True for ski-lift; False for snowboarding or schnapps). These files are loaded in the code

block below and will be the training set.

For this, I’m using the untangle library, which converts XML into native Python objects.

Parsing the relevant parts from OpenSnowMap is quite heavy,

so I serialise it into lifts.jsonl so it doesn’t need to run each time.

[Download lifts.jsonl]

The dataset consists of nodes and ways. A way can have the label “aerialway”, which seem

to be the ski lifts. For each way that has this label, I collect all the nodes and

save them in JSON lines format. The XML parsing is done using untangle again.

if not os.path.exists('data/lifts.jsonl'):
    opensnowmap = untangle.parse('data/planet_pistes.osm')
    nodes = {node['id']: (node['lat'], node['lon']) for node in opensnowmap.osm.node}
    with open('data/lifts.jsonl', 'w') as w:
        lifts = []
        for way in opensnowmap.osm.way:
            try:
                for tag in way.tag:
                    if tag['k'] == 'aerialway':
                        lifts.append([nodes[nd['ref']] for nd in way.nd])
                        w.write(json.dumps(lifts[-1]))
                        w.write('\n')
                        break
            except AttributeError:
                continue

if os.path.exists('data/lifts.jsonl'):
    lifts = []
    lift_points = []
    with open('data/lifts.jsonl', 'r') as f:
        for i, line in enumerate(f.readlines()):
            skilift = json.loads(line)
            lifts.append(skilift)
            for lat, lon in skilift:
                lift_points.append({'lift_id': i,
                                    'lift_point': Point(float(lat), float(lon))})
    lift_points = geopandas.GeoDataFrame(lift_points)

In the code blocks below, I add some crafted features.

- speed differences between smoothed and current speed (how constant is the speed)

- altitude changes (going up is more likely to be a ski lift, although not all ski lifts go up)

- distance to closest known ski lift

- (smoothed) alignment with closest known ski lift (TODO)

- curviness (sinuosity index (of the last 10 seconds))
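The curviness feature from the list above can be illustrated in isolation. The sketch below is not the notebook's code: haversine_m and straightness are hypothetical helpers written with only the standard library, and the Earth radius constant and the sample coordinates are assumptions. It computes the straight-line distance between the first and last point divided by the length of the path through all points, so a perfectly straight track scores about 1 and a winding one scores lower.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_M = 6371008.8  # mean Earth radius in meters (assumed constant)


def haversine_m(a, b):
    """Great-circle distance in meters between two (lat, lon) pairs in degrees."""
    lat1, lon1 = map(radians, a)
    lat2, lon2 = map(radians, b)
    h = (sin((lat2 - lat1) / 2) ** 2
         + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_M * asin(sqrt(h))


def straightness(points):
    """Straight-line distance divided by path length; 0.0 if there is no movement."""
    path = sum(haversine_m(p, q) for p, q in zip(points, points[1:]))
    if path == 0:
        return 0.0
    return haversine_m(points[0], points[-1]) / path


lift_like = [(46.0, 7.0), (46.001, 7.0), (46.002, 7.0)]     # straight line
slope_like = [(46.0, 7.0), (46.001, 7.002), (46.002, 7.0)]  # zig-zag

print(straightness(lift_like))
print(straightness(slope_like))
```

Ski lifts tend to run in straight lines, which is what makes this ratio a plausible feature for telling them apart from slopes.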

def sinuosity_index(window):
    source = list(window)
    window = []
    for latlon in source:
        lat, lon = numpy.frombuffer(bytes(latlon), dtype=numpy.float32)
        window.append((lat, lon))
    last_lat, last_lon = window[0]
    first_lat, first_lon = previous_lat, previous_lon = window.pop()
    distance = 0.
    for lat, lon in window:
        distance += haversine((previous_lat, previous_lon), (lat, lon), unit=Unit.METERS)
        previous_lat, previous_lon = lat, lon
    try:
        sinuosity = (haversine((first_lat, first_lon), (last_lat, last_lon), unit=Unit.METERS)) / distance
    except ZeroDivisionError:
        sinuosity = 0.
    return sinuosity


skilifts_multipoint = MultiPoint(lift_points['lift_point'].tolist())


def distance_to_lift(row):
    query, result = nearest_points(Point(float(row['lat']), float(row['lon'])),
                                   skilifts_multipoint)
    row['Distance to ski lift (meters)'] = haversine(
        (query.x, query.y),
        (result.x, result.y),
        unit=Unit.METERS
    )
    return row

class ReadSnowboardingDataset(TransformerMixin, BaseEstimator):
    def __init__(self, sinuosity_window=10, altitude_window=15):
        self.sinuosity_window = sinuosity_window
        self.altitude_window = altitude_window

    def fit(self, X):
        return self.transform(X)

    def transform(self, X):
        snowboarding_datasets = []
        for snowboarding_filename in X:
            snowboarding = pandas.read_csv(snowboarding_filename, skiprows=2)
            trackpoints = untangle.parse(snowboarding_filename.replace(".csv", ".gpx"))
            start_time = iso8601.parse_date(trackpoints.gpx.metadata.time.cdata)
            trackpoints = pandas.DataFrame(
                [
                    {'lat': trackpoint['lat'],
                     'lon': trackpoint['lon'],
                     'latlon': numpy.frombuffer(
                         bytes(numpy.float32(trackpoint['lat'])) +
                         bytes(numpy.float32(trackpoint['lon'])),
                         dtype=numpy.float64,
                     ),
                     'timestamp': iso8601.parse_date(trackpoint.time.cdata)}
                    for trackpoint in trackpoints.gpx.trk.trkseg.trkpt
                ]
            )
            snowboarding['timestamp'] = snowboarding['Time'].apply(str)
            del snowboarding['Time']
            # TODO proper formatting of timedelta or timestamp using proper utils
            trackpoints['timestamp'] = trackpoints['timestamp'] - start_time
            trackpoints['timestamp'] = trackpoints['timestamp'].apply(
                lambda x: str(x).split('days ')[1][0:8]
            ).apply(str)
            snowboarding = snowboarding.merge(trackpoints, on='timestamp')
            snowboarding = snowboarding.apply(distance_to_lift, axis=1)
            snowboarding['Sinuosity index'] = snowboarding['latlon'].rolling(self.sinuosity_window).apply(
                sinuosity_index, raw=True
            )
            # TODO alignment with closest ski lift
            snowboarding['Altitude change (m)'] = snowboarding['Altitude (m)'].diff()
            snowboarding['Altitude change smoothed (m)'] = snowboarding['Altitude change (m)']\
                .rolling(self.altitude_window).mean()
            snowboarding['Speed smoothed (km/h)'] = snowboarding['Speed (km/h)']\
                .rolling(self.altitude_window).mean()
            snowboarding['Absolute speed difference between smoothed and current (km/h)'] = \
                snowboarding['Speed (km/h)'] - snowboarding['Speed smoothed (km/h)']
            snowboarding['Relative speed difference between smoothed and current (km/h)'] = \
                snowboarding['Absolute speed difference between smoothed and current (km/h)'] / \
                snowboarding['Speed (km/h)']
            snowboarding_datasets.append(snowboarding)
        return pandas.concat(snowboarding_datasets)
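The TODO about formatting the timedelta could be addressed without string slicing. A minimal sketch, assuming the Time column in the CSV uses HH:MM:SS (which the merge on the sliced string suggests); format_elapsed is a hypothetical helper and not part of the notebook:

```python
from datetime import timedelta


def format_elapsed(delta: timedelta) -> str:
    """Format an elapsed time as HH:MM:SS, dropping fractions of a second."""
    total_seconds = int(delta.total_seconds())
    hours, remainder = divmod(total_seconds, 3600)
    minutes, seconds = divmod(remainder, 60)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}"


print(format_elapsed(timedelta(hours=1, minutes=2, seconds=3.7)))  # 01:02:03
```

Like the slicing in the transformer, this truncates rather than rounds, so both sides of the merge would keep using whole seconds.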

Q: What sorcery is happening with the latlon field?

A: pandas currently makes it hard to apply a function on a rolling window, for Series

that are non-numeric [1].

The same goes for rolling calculations that need multiple fields

[2].

Therefore, I mash two 32 bit floats into a single 64 bit float,

and unpack it in the function sinuosity_index.
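The packing trick can be shown on its own with the standard library's struct module. This is a sketch rather than the notebook's numpy code: pack_latlon and unpack_latlon are hypothetical names, and the little-endian byte order is an assumption made to keep the example deterministic.

```python
import struct


def pack_latlon(lat: float, lon: float) -> float:
    """Store two 32-bit floats in the 8 bytes of a single 64-bit float."""
    return struct.unpack('<d', struct.pack('<ff', lat, lon))[0]


def unpack_latlon(packed: float):
    """Recover the two 32-bit floats from the packed 64-bit float."""
    return struct.unpack('<ff', struct.pack('<d', packed))


packed = pack_latlon(46.5, 7.9)
lat, lon = unpack_latlon(packed)
print(packed)    # a meaningless number, only useful as a container
print(lat, lon)  # the coordinates, at 32-bit precision
```

The packed value is only a container: arithmetic on it (a rolling mean, for example) would be meaningless, and the coordinates come back with 32-bit precision, about seven significant decimal digits.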

class SplitFeaturesClass(TransformerMixin, BaseEstimator):
    def fit(self, X):
        return self.transform(X)

    def transform(self, X):
        snowboarding_selection = X[
            ['Altitude change smoothed (m)',
             'Speed (km/h)',
             'Absolute speed difference between smoothed and current (km/h)',
             'Relative speed difference between smoothed and current (km/h)',
             'Distance to ski lift (meters)',
             'Sinuosity index',
             'Lift']].dropna()
        movement_features = snowboarding_selection[
            ['Altitude change smoothed (m)',
             'Speed (km/h)',
             'Absolute speed difference between smoothed and current (km/h)',
             'Relative speed difference between smoothed and current (km/h)',
             'Distance to ski lift (meters)',
             'Sinuosity index']]\
            .replace([numpy.inf, -numpy.inf], 0.)
        is_lift = snowboarding_selection['Lift']
        return movement_features, is_lift

For this project, I wanted the complexity to be in the feature engineering step, and then just fit

a very simple model (logistic regression). In the code block below, the data is split in the features

and the target classes (X and y respectively in scikit-learn terms).

snowboarding_pipeline = Pipeline([
    ('read_snowboarding_dataset', ReadSnowboardingDataset()),
    ('split_features_class', SplitFeaturesClass()),
])
snowboarding_filenames = ['./data/Bart_Broere_2020-02-05_14-56-24.csv']
features, is_lift = snowboarding_pipeline.transform(snowboarding_filenames)
# features = PolynomialFeatures(degree=2, interaction_only=True).fit_transform(features)
model = LogisticRegressionCV(max_iter=10000)
model.fit(X=features, y=is_lift)
cross_validated_scores = cross_val_score(model,
                                         X=features,
                                         y=is_lift,
                                         cv=4)
pprint(list(cross_validated_scores))
print(numpy.mean(cross_validated_scores))

[1.0, 0.993849938499385, 0.9876998769987699, 0.9408866995073891]

0.980609128751386

for column, weight in zip(features.columns, list(model.coef_[0])):
    print(f"{column}: {weight}")

Altitude change smoothed (m): 3.5423181866480538

Speed (km/h): 0.21813147529809632

Absolute speed difference between smoothed and current (km/h): -0.026170639562450405

Relative speed difference between smoothed and current (km/h): 0.22554038490129336

Distance to ski lift (meters): 0.0046837551993429835

Sinuosity index: 0.21784616373482674

Although the classifier’s performance is quite good already, its robustness could probably be improved by more labeled data.

Currently it’s a classifier that assigns the most value to the altitude change (are we going up?).

Adding labeled examples of downhill ski lifts would probably improve this, hopefully shifting weight to features like how constant the speed is.

This does require more data labeling, which is boring. Searching for better hyperparameters (like the window for curviness)

only makes sense if there’s more training data. With the limited set of training data available now, the hyperparameters can’t be searched

reliably.

Oh, and I like datalore.io, but I sometimes miss my debugger.